Today, I'm thrilled to announce the public beta launch of our most requested feature: S3 Compatible APIs for B2 Cloud Storage. If you have wanted to use B2 Cloud Storage but your application or device didn't support it, or you wanted to move to cloud storage in general but couldn't afford it, you should be able to start right away with the new S3 Compatible APIs for Backblaze B2.

Add Backblaze S3-compatible Storage Destination

- In the MSP360 Backup application menu, click Add Storage Account.

- Select Backblaze B2 (S3 compatible).

- Specify the credentials and S3 Endpoint for the new bucket.

Now you can use Backblaze B2 backup storage for synthetic image-based backups. To do so, enable Synthetic Backup.

Last updated November 06, 2020

Backblaze B2 Cloud Storage (B2) public and private buckets can be used as origins with Fastly.

TIP: Backblaze offers an integration discount that eliminates egress costs to Fastly when using Backblaze B2 Cloud Storage as an origin. In addition, Backblaze also offers a migration program designed to offset many of the data transfer costs associated with switching from another cloud provider to Backblaze. To ensure your migration has minimal downtime, contact support@fastly.com.

Before you begin

Before you begin the setup and configuration steps required to use B2 as an origin, keep in mind the following:

- You must have a valid Backblaze account. Before you can create a new bucket and upload files to it for Fastly to use, you must first create a Backblaze account at the Backblaze website.

- Backblaze provides two ways to set up and configure B2: the Backblaze web interface or the B2 command line tool. Either method works for public buckets. To use private buckets, however, you must use the B2 command line tool. For additional details, including instructions on how to install the command line tool, read Backblaze's B2 documentation.

- Backblaze provides two APIs for integrating with Backblaze B2 Cloud Storage. You can use the B2 Cloud Storage API or the S3 Compatible API to make your B2 data buckets available through Fastly. The S3 Compatible API allows existing S3 integrations and SDKs to integrate with B2. Buckets and their specific application keys created prior to May 4th, 2020, however, cannot be used with the S3 Compatible API. For more information, read Backblaze's article on Getting Started with the S3 Compatible API.

Using Backblaze B2 as an origin

To use B2 as an origin, follow the steps below.

Creating a new bucket

Data in B2 is stored in buckets. Follow these steps to create a new bucket via the B2 web interface.

TIP: The Backblaze Guide provides details on how to create a bucket using the command line tool.

- Log in to your Backblaze account. Your Backblaze account settings page appears.

- Click the Buckets link. The B2 Cloud Storage Buckets page appears.

- Click the Create a Bucket link. The Create a Bucket window appears.

- In the Bucket Unique Name field, enter a unique bucket name. Each bucket name must be at least 6 alphanumeric characters and can only use hyphens (-) as separators, not spaces.

- Click the Create a Bucket button. The new bucket appears in the list of buckets on the B2 Cloud Storage Buckets page.

- Upload a file to the new bucket you just created.

NOTE: Buckets created prior to May 4th, 2020 cannot be used with the S3 Compatible API. If you do not have any S3 Compatible buckets, Backblaze recommends creating a new bucket.
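The naming rule above can be checked before you type the name into the web interface. This is a minimal Python sketch that encodes only the rule stated in this guide (at least 6 characters made of letters, digits and hyphens, no spaces); the helper name is hypothetical, and Backblaze may enforce further constraints, such as a maximum length, that it does not check.

```python
import re

# Hypothetical helper: encodes only the rule stated in this guide
# (at least 6 characters; letters, digits and hyphens; no spaces).
# Backblaze may apply further constraints that this check ignores.
_BUCKET_NAME_RE = re.compile(r"[A-Za-z0-9-]{6,}")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if `name` satisfies the naming rule described above."""
    return _BUCKET_NAME_RE.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ("backblaze-s3-api", "my bucket", "abc"):
        print(candidate, is_valid_bucket_name(candidate))
```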

Uploading files to a new bucket

Once you've created a new bucket in which to store your data, follow these steps to upload files to it via the B2 web interface.

TIP: The Backblaze Guide provides details on how to upload files using the command line tool.

- Click the Buckets link in the B2 web interface. The B2 Cloud Storage Buckets page appears.

- Find the bucket details for the bucket you just created.

- Click the Upload/Download button. The Browse Files page appears.

- Click the Upload button. The upload window appears.

- Either drag and drop any file into the window or click to use the file selection tools to find a file to upload. The name and type of the file don't matter at this stage; any file will work. Once uploaded, the name of the file appears in the list of files for the bucket.

- Find your bucket's assigned hostname so you can set up a Fastly service that interacts with B2.

Finding your bucket's assigned hostname

To set up a Fastly service that interacts with B2, you will need to know the hostname Backblaze assigned to the bucket you created and uploaded files to.

Find your hostname in one of the following ways:

- Via the B2 web interface when you're using the standard B2 Cloud Storage API. Click the name of the file you just uploaded and examine the Friendly URL and Native URL fields in the Details window that appears. The hostname is the text after the https:// designator that matches exactly in each line.

- Via the command line and the B2 Cloud Storage API. Run the b2 get-account-info command on the command line and use the hostname from the downloadUrl attribute.

- Via the B2 web interface when you're using the S3 Compatible API. Click the Buckets link and find the bucket details for the bucket you just created. The hostname is the text in the Endpoint field.
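Picking the shared hostname out of the Friendly URL and Native URL (or out of the downloadUrl value) is a small parsing step. The Python sketch below, using only the standard library, returns the hostname common to all the URLs it is given and fails loudly if they disagree; the function name and the sample URLs are hypothetical, chosen only to match the f000.backblazeb2.com style of hostname used elsewhere in this guide.

```python
from urllib.parse import urlsplit


def bucket_hostname(*urls: str) -> str:
    """Return the hostname shared by all `urls` (e.g. Friendly URL and Native URL).

    Raises ValueError if the URLs disagree or if no hostname can be parsed.
    """
    hosts = {urlsplit(url).hostname or "" for url in urls}
    if len(hosts) != 1 or "" in hosts:
        raise ValueError(f"expected one common hostname, got {sorted(hosts)}")
    return hosts.pop()


if __name__ == "__main__":
    # Hypothetical URLs in the shape shown in the Details window.
    friendly = "https://f000.backblazeb2.com/file/testing/hello.txt"
    native = "https://f000.backblazeb2.com/b2api/v1/b2_download_file_by_id?fileId=4_zexample"
    print(bucket_hostname(friendly, native))
```

The same function works on a bare downloadUrl such as https://f000.backblazeb2.com, since a single URL trivially shares its own hostname.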

Creating a Backblaze application key for private buckets

Your Backblaze master application key controls access to all buckets and files on your Backblaze account. If you plan to use a Backblaze B2 private bucket with Fastly, you should create an application key specific to the bucket.

NOTE: The Backblaze documentation provides more information about application keys. When creating application keys for your private bucket, we recommend using the least amount of privileges possible. You can optionally set the key to expire after a certain number of seconds (up to a maximum of 1000 days or 86,400,000 seconds). If you choose an expiration, however, you'll need to periodically create a new application key and then update your Fastly configuration accordingly each time.
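Because the expiration is entered in seconds, the 1000-day ceiling works out to 1000 × 86,400 = 86,400,000 seconds. A small Python sketch of that conversion, with a range check against the limit stated in the note (the helper name is hypothetical):

```python
SECONDS_PER_DAY = 24 * 60 * 60                     # 86,400
MAX_KEY_LIFETIME_SECONDS = 1000 * SECONDS_PER_DAY  # 86,400,000, per the note above


def key_duration_seconds(days: int) -> int:
    """Convert an application-key lifetime in whole days to seconds.

    Raises ValueError if the result is not positive or exceeds the
    1000-day maximum mentioned above.
    """
    seconds = days * SECONDS_PER_DAY
    if not 0 < seconds <= MAX_KEY_LIFETIME_SECONDS:
        raise ValueError(f"{days} days is outside the allowed 1..1000 day range")
    return seconds


if __name__ == "__main__":
    print(key_duration_seconds(30))  # a 30-day key
```

Shorter lifetimes limit the damage if a key leaks, at the cost of rotating it (and updating Fastly) more often.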

Via the web interface

To create an application key via the B2 web interface:

- Click the App Keys link. The Application Keys page appears.

- Click the Add a New Application Key button. The Add Application Key window appears.

- Fill out the fields of the Add Application Key controls as follows:

  - In the Name of Key field, enter the name of your private bucket key. Key names are alphanumeric and can only use hyphens (-) as separators, not spaces.

  - From the Allow access to Bucket(s) menu, select the name of your private bucket.

  - From the Type of Access controls, select Read Only.

  - Leave the remaining optional controls and fields blank.

- Click the Create New Key button. A success message and your new application key appear.

- Immediately note the keyID and the applicationKey from the success message. You'll use this information when you implement header-based authentication with private objects.

Via the command line

To create an application key from the command line, run the create-key command as follows:

where <bucketName> and <keyName> are the name of your bucket and the name you want to give the new key. For example:

The keyID and the applicationKey are the two values returned.
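If you script key creation, you need to build the command and split its output into the two values. The Python sketch below assumes the 2020-era b2 command line syntax "b2 create-key --bucket <bucketName> <keyName> <capabilities>" and assumes the command prints the keyID and the applicationKey separated by whitespace. The capability list listBuckets,readFiles (intended as a read-only key) and all helper names are assumptions, so check them against the documentation for your installed b2 version before relying on them.

```python
import subprocess
from typing import List, Tuple


def create_key_command(bucket_name: str, key_name: str,
                       capabilities: str = "listBuckets,readFiles") -> List[str]:
    """Build the argv for a bucket-restricted create-key call (assumed syntax)."""
    return ["b2", "create-key", "--bucket", bucket_name, key_name, capabilities]


def parse_create_key_output(output: str) -> Tuple[str, str]:
    """Split the command output into (keyID, applicationKey)."""
    parts = output.split()
    if len(parts) != 2:
        raise ValueError(f"unexpected create-key output: {output!r}")
    return parts[0], parts[1]


def create_bucket_key(bucket_name: str, key_name: str) -> Tuple[str, str]:
    """Run the b2 CLI (must be installed and authorized) and return the key pair."""
    result = subprocess.run(create_key_command(bucket_name, key_name),
                            capture_output=True, text=True, check=True)
    return parse_create_key_output(result.stdout)
```

Keeping command construction and output parsing separate from the subprocess call makes both easy to check without touching a real account.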

NOTE: Application keys created prior to May 4th, 2020 cannot be used with the S3 Compatible API.

Creating a new service

To create a new Fastly service, you must first create a new domain and then create a new Host and edit it to accept traffic for B2. Instructions to do this appear in our guide to creating a new service. While completing these instructions, keep the following in mind:

- When you create the new Host, enter the B2 bucket's hostname in the Hosts field on the Origins page.

- When you edit the Host details on the Edit this host page, confirm the Transport Layer Security (TLS) area information for your Host. Specifically, make sure you:

- secure the connection between Fastly and your origin.

- enter your bucket's hostname in the Certificate hostname field.

- select the checkbox to match the SNI hostname to the Certificate hostname (it appears under the SNI hostname field).

- Also when you edit the Host, optionally enable shielding by choosing the appropriate shielding location from the Shielding menu. With B2 Cloud Storage, choose the shielding location closest to the Backblaze data center where your bucket resides. For the data centers closest to:

- Sacramento, California (in the US West region), choose San Jose (SJC) from the Shielding menu.

- Phoenix, Arizona (in the US West region), choose Palo Alto (PAO) from the Shielding menu.

- Amsterdam, Netherlands (in the EU central region), choose Amsterdam (AMS) from the Shielding menu.

- Decide whether you should specify an override Host in the Advanced options area:

  - If you're using the S3 Compatible API, skip this step and don't specify an override Host.

  - If you're not using the S3 Compatible API, in the Override host field in the Advanced options, enter an appropriate address for your Host (e.g., s3.us-west-000.backblazeb2.com or f000.backblazeb2.com).
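The Host settings above can be summarized as data, which is handy as a checklist when reviewing a service. The Python sketch below is not the Fastly API: the dictionary keys and the function name are hypothetical, and the shielding table simply restates the three data center mappings listed above.

```python
from typing import Dict, Optional

# Backblaze data center -> Fastly shielding location, as listed above.
SHIELD_FOR_DATA_CENTER: Dict[str, str] = {
    "Sacramento": "San Jose (SJC)",
    "Phoenix": "Palo Alto (PAO)",
    "Amsterdam": "Amsterdam (AMS)",
}


def b2_host_settings(bucket_hostname: str, uses_s3_api: bool,
                     data_center: Optional[str] = None,
                     override_host: Optional[str] = None) -> Dict[str, object]:
    """Collect the Host settings described above (hypothetical field names)."""
    settings: Dict[str, object] = {
        "address": bucket_hostname,               # Hosts field on the Origins page
        "use_tls": True,                          # secure the Fastly-to-origin connection
        "certificate_hostname": bucket_hostname,
        "sni_hostname": bucket_hostname,          # SNI matches the certificate hostname
    }
    if data_center is not None:                   # shielding is optional
        settings["shield"] = SHIELD_FOR_DATA_CENTER[data_center]
    if not uses_s3_api:
        if override_host is None:
            raise ValueError("an override Host is needed without the S3 Compatible API")
        settings["override_host"] = override_host
    return settings
```

Raising on a missing override Host mirrors the rule above: the S3 Compatible API path never sets one, while the native B2 API path always does.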

Using the S3 Compatible API

Using the S3 Compatible API with public objects

To use the S3 Compatible API with public objects, you need to make sure the Host header contains the name of your B2 bucket. There are two ways to do this. Both require your region name, which is the second dot-separated part of your S3 Endpoint: if your S3 Endpoint is s3.us-west-000.backblazeb2.com, your region is us-west-000.

- In the Origin you created, set the Override host field in the Advanced options to <bucket>.s3.<region>.backblazeb2.com (e.g., testing.s3.us-west-000.backblazeb2.com).

- Create a VCL Snippet. When you create the snippet, select within subroutine to specify its placement and choose miss as the subroutine type. Then, populate the VCL field with the following code. Be sure to change specific values as noted to ones relevant to your own B2 bucket; in this example, var.b2Bucket would be 'testing' and var.b2Region would be 'us-west-000'.
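Deriving the region and the bucket's virtual host from the S3 Endpoint is simple string handling. This minimal Python sketch follows the rule above (the region is the second dot-separated part of the endpoint) and builds the <bucket>.s3.<region>.backblazeb2.com value for the Override host field; the function names are hypothetical.

```python
def region_from_endpoint(endpoint: str) -> str:
    """Return the region, i.e. the second dot-separated part of an S3 Endpoint."""
    if "://" in endpoint:                  # tolerate a pasted https:// prefix
        endpoint = endpoint.split("://", 1)[1]
    parts = endpoint.split(".")
    if len(parts) < 3 or parts[0] != "s3":
        raise ValueError(f"not an S3 Endpoint: {endpoint!r}")
    return parts[1]


def virtual_host(bucket: str, endpoint: str) -> str:
    """Build <bucket>.s3.<region>.backblazeb2.com for the Override host field."""
    return f"{bucket}.s3.{region_from_endpoint(endpoint)}.backblazeb2.com"


if __name__ == "__main__":
    print(virtual_host("testing", "s3.us-west-000.backblazeb2.com"))
```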

Using the S3 Compatible API with private objects

To use a Backblaze B2 private bucket with Fastly, you must implement version 4 of Amazon’s header-based authentication. You can do this using custom VCL.

Start by obtaining the following information from Backblaze (see Creating a Backblaze application key for private buckets):

| Item | Description |
|---|---|
| Bucket name | The name of your Backblaze B2 bucket. When you download items from your bucket, this is the string listed in the URL path or hostname of each object. |
| Region | The Backblaze region code of the location where your bucket resides (e.g., us-west-000). |
| Access key | The Backblaze keyID for the application key that has at least read permission on the bucket. |
| Secret key | The Backblaze applicationKey paired with the access key above. |

Once you have this information, you can configure your Fastly service to authenticate against your B2 bucket using header authentication by calculating the appropriate header value in VCL.
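To see what the VCL has to compute, it can help to run the same AWS Signature Version 4 calculation outside Fastly, for example to confirm that a keyID and applicationKey pair works. The Python sketch below signs a GET with an empty body and no query string, using only the standard library. It assumes virtual-hosted requests to <bucket>.s3.<region>.backblazeb2.com and signs only the host, x-amz-content-sha256 and x-amz-date headers; it is not the Fastly snippet itself.

```python
import datetime
import hashlib
import hmac
from typing import Dict, Optional
from urllib.parse import quote

# SHA-256 of an empty body; GET requests sign this value.
EMPTY_SHA256 = hashlib.sha256(b"").hexdigest()
SIGNED_HEADERS = "host;x-amz-content-sha256;x-amz-date"


def _hmac(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sign_b2_get(bucket: str, region: str, access_key: str, secret_key: str,
                object_path: str,
                now: Optional[datetime.datetime] = None) -> Dict[str, str]:
    """Return the headers for a SigV4-signed GET of object_path (no query string).

    access_key is the B2 keyID, secret_key the applicationKey; now must be UTC.
    """
    if now is None:
        now = datetime.datetime.now(datetime.timezone.utc)
    amz_date = now.strftime("%Y%m%dT%H%M%SZ")
    date_stamp = now.strftime("%Y%m%d")
    host = f"{bucket}.s3.{region}.backblazeb2.com"  # virtual-hosted style (assumed)

    # 1. Canonical request: S3 encodes each path segment once.
    canonical_headers = (f"host:{host}\n"
                         f"x-amz-content-sha256:{EMPTY_SHA256}\n"
                         f"x-amz-date:{amz_date}\n")
    canonical_request = "\n".join([
        "GET", quote(object_path, safe="/"), "",  # empty canonical query string
        canonical_headers, SIGNED_HEADERS, EMPTY_SHA256,
    ])

    # 2. String to sign, scoped to date/region/service.
    scope = f"{date_stamp}/{region}/s3/aws4_request"
    string_to_sign = "\n".join([
        "AWS4-HMAC-SHA256", amz_date, scope,
        hashlib.sha256(canonical_request.encode("utf-8")).hexdigest(),
    ])

    # 3. Derive the signing key (date -> region -> service -> request) and sign.
    key = _hmac(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    for part in (region, "s3", "aws4_request"):
        key = _hmac(key, part)
    signature = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()

    return {
        "Host": host,
        "x-amz-content-sha256": EMPTY_SHA256,
        "x-amz-date": amz_date,
        "Authorization": (f"AWS4-HMAC-SHA256 Credential={access_key}/{scope}, "
                          f"SignedHeaders={SIGNED_HEADERS}, Signature={signature}"),
    }
```

The returned headers can be attached to any HTTP client request for the object. A signature is only valid for a short window around x-amz-date, which is why the VCL recomputes it on every miss rather than storing a fixed value.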

Start by creating a regular VCL snippet. Give it a meaningful name, such as AWS protected origin. When you create the snippet, select within subroutine to specify its placement and choose miss as the subroutine type. Then, populate the VCL field with the following code (be sure to change specific values as noted to ones relevant to your own B2 bucket):

Using the B2 API

Public Objects

You'll need to make sure the URL contains your bucket name. There are two ways to do this.


- Using a Header object:

  - Click the Create header button to create a new header. The Create a header page appears.

  - Fill out the Create a header fields as follows:

    - In the Name field, type Rewrite B2 URL.
    - From the Type menu, select Request, and from the Action menu, select Set.
    - In the Destination field, type url.
    - From the Ignore if set menu, select No.
    - In the Priority field, type 20.
    - In the Source field, type '/file/<Bucket Name>' req.url.

  - Click the Create button. A new Rewrite B2 URL header appears on the Content page.

  - Click the Activate button to deploy your configuration changes.

Alternatively, create a VCL Snippet. When you create the snippet, select within subroutine to specify its placement and choose miss as the subroutine type. Then, populate the VCL field with the following code. Be sure to change the variable to the name of your own B2 bucket.
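Whichever of the two methods you choose, the effect on the request is to prepend /file/<Bucket Name> to req.url, which is the path the native B2 download endpoint expects. A minimal Python model of that string transformation, useful for predicting the origin path (the function name is hypothetical, and this is not Fastly code):

```python
def rewrite_b2_url(req_url: str, bucket_name: str) -> str:
    """Prefix the request URL with /file/<Bucket Name>, as the header's Source does."""
    if not req_url.startswith("/"):
        raise ValueError("req.url is expected to start with '/'")
    return f"/file/{bucket_name}{req_url}"


if __name__ == "__main__":
    print(rewrite_b2_url("/images/cat.jpg?w=200", "testing"))
```

Note that any query string in req.url is carried along unchanged.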

Private Objects

To use a Backblaze B2 private bucket with Fastly, you must obtain an Authorization Token. This must be obtained via the command line.

- Authorize the command line tool with the application key you obtained.

- Get an authorization token for the private bucket. For example:

This creates a token that is valid for 86,400 seconds (i.e., 1 day), the default. You can optionally set the expiration time to anywhere between 1 second and 604,800 seconds (i.e., 1 week).

Take note of the generated token.

NOTE: You will need to regenerate an authorization token and update your Fastly configuration before the end of the expiration time. A good way to do this would be through Fastly's versionless Edge Dictionaries.
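When automating the regeneration, the useful number is the latest moment at which a fresh token must reach Fastly. The Python sketch below computes it from the issue time and the token lifetime, checking the lifetime against the 1 to 604,800 second range stated above. The one-hour safety margin and the function name are hypothetical choices, not Backblaze or Fastly requirements.

```python
import datetime

DEFAULT_TOKEN_SECONDS = 86_400  # 1 day, the default mentioned above
MAX_TOKEN_SECONDS = 604_800     # 1 week, the maximum mentioned above


def refresh_deadline(issued_at: datetime.datetime,
                     duration: int = DEFAULT_TOKEN_SECONDS,
                     safety_margin: int = 3_600) -> datetime.datetime:
    """Latest time to push a new token to Fastly, leaving safety_margin seconds spare."""
    if not 1 <= duration <= MAX_TOKEN_SECONDS:
        raise ValueError(f"duration must be 1..{MAX_TOKEN_SECONDS} seconds")
    if not 0 <= safety_margin < duration:
        raise ValueError("safety_margin must be shorter than the token lifetime")
    return issued_at + datetime.timedelta(seconds=duration - safety_margin)
```

With the default one-day token issued at midnight UTC, the deadline falls at 23:00 UTC the same day.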

Passing a generated token to Backblaze

There are two ways you can pass the generated token to Backblaze. The first is using an Authorization header. This is the recommended method.

- Click the Create header button to create a new header. The Create a header page appears.

- Fill out the Create a header fields as follows:

  - In the Name field, enter Authorization.
  - From the Type menu, select Request, and from the Action menu, select Set.
  - In the Destination field, enter http.Authorization.
  - From the Ignore if set menu, select No.
  - In the Priority field, enter 20.
  - In the Source field, enter the Authorization Token generated in the command line tool, surrounded by quotes. For example, if the token generated was DEC0DEC0C0A, the Source field would be 'DEC0DEC0C0A'.

- Click the Create button. A new Authorization header appears on the Content page.

- Click the Activate button to deploy your configuration changes.
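Before wiring the token into Fastly, you may want to confirm it works by requesting a private file directly. The Python sketch below only builds (does not send) such a request with the standard library, passing the token in the Authorization header just as the header object above does. The /file/<bucket>/<file> path follows the rewrite used for public objects; the helper name, hostname and file name are hypothetical.

```python
import urllib.parse
import urllib.request


def b2_download_request(download_host: str, bucket_name: str, file_name: str,
                        token: str) -> urllib.request.Request:
    """Build (but do not send) a GET for a private file, passing the token in Authorization."""
    path = urllib.parse.quote(f"/file/{bucket_name}/{file_name}")  # keeps "/" separators
    return urllib.request.Request(f"https://{download_host}{path}",
                                  headers={"Authorization": token}, method="GET")


if __name__ == "__main__":
    req = b2_download_request("f000.backblazeb2.com", "testing", "photos/cat.jpg", "DEC0DEC0C0A")
    print(req.get_method(), req.full_url, req.get_header("Authorization"))
```

Passing the request to urllib.request.urlopen would actually send it, which requires network access and a valid token.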

Alternatively, the second way is to pass an Authorization query parameter.

- Click the Create header button to create a new header. The Create a header page appears.

- Fill out the Create a header fields as follows:

  - In the Name field, enter Authorization.
  - From the Type menu, select Request, and from the Action menu, select Set.
  - In the Destination field, enter url.
  - From the Ignore if set menu, select No.
  - In the Priority field, enter 20.
  - In the Source field, enter the header authorization information using the following format:

    Using the previous example, that would be:

- Click the Create button. A new Authorization header appears on the Content page.

- Click the Activate button to deploy your configuration changes.
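Whatever the exact Source expression, the intended result is the original URL with an Authorization query parameter carrying the token. The Python model below, using only the standard library, assumes the parameter is literally named Authorization and that an existing value should be replaced, mirroring the header's Set action. The helper is hypothetical and is not Fastly code.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_authorization_param(req_url: str, token: str) -> str:
    """Return req_url with an Authorization query parameter set to token.

    Any existing Authorization parameter is replaced; other parameters are kept.
    """
    parts = urlsplit(req_url)
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
             if k != "Authorization"]
    query.append(("Authorization", token))
    return urlunsplit((parts.scheme, parts.netloc, parts.path,
                       urlencode(query), parts.fragment))


if __name__ == "__main__":
    print(with_authorization_param("/file/testing/cat.jpg", "DEC0DEC0C0A"))
```

Note that a token in the URL can end up in logs and cache keys, which is one reason the header method is the recommended one.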

Storage is a huge part of MD3’s infrastructure, and backups are a giant concern for us; that’s why we have used AWS S3 for a long time.

Mediatree’s core business is media broadcast monitoring, which involves recording and processing huge amounts of media files. Our production infrastructure currently handles around 500 international TV and radio channels: we record each channel in its native quality and process the recordings to extract metadata (EPG information, thumbnails, closed captions and speech-to-text) and make it available to our customers.

MD3 is responsible for the administration of the IT Infrastructure of our parent company Mediatree.

Mediatree stores up to 1 year of this content, which makes storage a huge part of our infrastructure. We currently have around 500 TB of data in storage, heavily mixed between large files (native .ts files in raw quality) and a huge number of small files (around 300 million, mainly .jpeg, .srt and .xml).

Storage solutions are typically designed to handle one of these cases well (big files or small files), which puts us in an extreme situation where we need to handle both types of data with similar performance.

Because of these storage requirements, backups are an even greater concern for us. Our platform runs 24/7 under very high SLAs, and even a few minutes of downtime affects production, because our customers expect us to always deliver the full content without any gaps in the video.

Our approach to backups follows the 3-2-1 rule: we always try to keep 3 copies of the data, in 2 different formats, with 1 copy offsite.
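The 3-2-1 rule is easy to state as a check over an inventory of copies. The Python sketch below uses a hypothetical data model (a location, a format and an offsite flag per copy), and the example plan in the usage note is invented for illustration; it does not describe MD3’s actual setup.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Copy:
    location: str  # where the copy lives, e.g. "main datacenter"
    fmt: str       # storage format or medium, e.g. "disk", "object storage"
    offsite: bool  # True if the copy is outside the main site


def satisfies_3_2_1(copies: List[Copy]) -> bool:
    """At least 3 copies, in at least 2 different formats, with at least 1 offsite."""
    return (len(copies) >= 3
            and len({c.fmt for c in copies}) >= 2
            and any(c.offsite for c in copies))
```

For example, a hypothetical plan with a primary disk copy and a tape copy in the main datacenter plus an object-storage copy in a cloud bucket passes, while dropping the cloud copy fails on both the copy count and the offsite requirement.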

For the offsite copy, we have long used AWS S3, with daily backups from our main datacenter into a few AWS S3 buckets. The backups are mainly the media files and the exported disks of the VMs that we run in our datacenter.

We are constantly searching for new solutions to improve our services and, if possible, reduce our costs. In 2018, our team went to the NAB Show in Las Vegas to meet with a few customers and to look for new solutions and ways to improve our services.

One of the stands there was Backblaze’s. We had an interesting discussion with a Backblaze representative, but at the time they were only based in the USA and had no datacenters in Europe, so it made no sense for us to use their services.

We liked the company’s approach to the storage problem they were trying to solve (a cheaper yet reliable alternative to other cloud storage services like AWS S3), and we kept following them to see their progress.

For us, Backblaze took a major step forward in August 2019 by opening its first European datacenter. In late 2019 we started testing the Backblaze B2 service; however, our tests revealed a major drawback: it was not S3 API compatible, which prevented us from using it with some of the backup software we had in production.

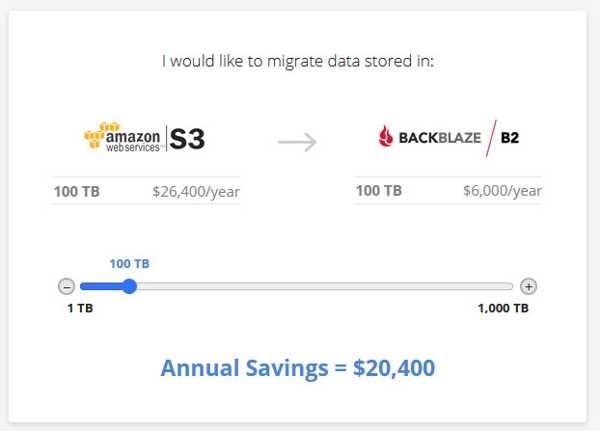

The second major step for us came in May 2020, when Backblaze announced that B2 was now compatible with the S3 API. This allowed us to migrate our backups fully from AWS S3 to Backblaze B2, saving up to 80% on our invoice.


The biggest advantage of Backblaze B2 is the pricing model. With Backblaze, our storage costs are about 5x lower than with AWS S3, and data egress is also cheaper.
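The "5x" and "up to 80%" figures describe the same thing: if the old bill is 5 times the new one, the new bill is 1/5 of the old, so the saving is 1 − 1/5 = 80%. A one-function Python sketch of that arithmetic (the function name is hypothetical; no actual prices are assumed):

```python
def savings_percent(price_ratio: float) -> float:
    """Percentage saved when the old provider costs price_ratio times the new one."""
    if price_ratio <= 0:
        raise ValueError("price_ratio must be positive")
    return (1 - 1 / price_ratio) * 100
```

A ratio of 5 gives about 80, matching the invoice savings reported above; a ratio of 2 would give 50.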

How to Set Up AWS CLI to Access Backblaze B2

Requirements:

- A registered Backblaze account.

  Important note: The location of the account is defined at registration. If you want your account to be located in Europe rather than the US, you need to specify it during registration.

- Access to the Backblaze console.

- AWS CLI installed.

  - AWS CLI is available in the official package repository of Ubuntu 18.04 LTS. If it is not installed, you can install it with “sudo apt update && sudo apt install awscli”.

Once inside the Backblaze console, we will go ahead and create our first bucket.

Select and Create Buckets

Inside the Backblaze console, navigate to “B2 Cloud Storage” and select “Buckets”.

After selecting Buckets, click “Create a Bucket”.

Enter the name of the bucket and choose whether you want your files to be public or private.

At this stage, our first bucket should be created. The important part for the rest of the tutorial is the “Endpoint”: this is the endpoint we will pass later when using the AWS S3 API from the CLI.

In the left menu, navigate to “App Keys” to create our application keys, which in Backblaze B2 are the equivalent of AWS IAM access keys.

By default, there is a Master Application Key. We will create a specific key that will be used only to access our new bucket.

Click “Add a New Application Key”.


While adding a new key, there are a few parameters that we can set:

- Name of Key – the name of the key

- Allow Access to Bucket(s) – restrict the key to a specific bucket

- Type of Access – the permissions the key has on the bucket (read, write, or both)

- Allow List All Bucket Names – allow this key to list the names of all buckets in the account

- File name prefix – allow access only to files whose names start with a specific prefix

- Duration – the number of seconds after which the key expires

In our case, we will restrict the access to the bucket we created previously and give it Read/Write permissions.

After pressing “Create New Key”, we should see a success message showing our newly created key. From it, we need to save the keyID and the applicationKey, which map to the AWS credentials as follows:

- keyID = AWS Access Key ID

- applicationKey = AWS Secret Access Key

Our configuration work in the Backblaze console is complete. We will keep it open so we can check the result of our first file upload later.

Now on to the AWS CLI configuration. We will add a new profile that will be used to access the Backblaze B2 bucket.

To do this, we add a profile to our AWS CLI configuration, which is usually located in the ~/.aws folder in our home directory.

Open the file ~/.aws/config and add the following at the bottom of the file:

[profile b2]

region = eu-central-003

output = json

We should have something like:

Now on our credentials file at ~/.aws/credentials we will add:

[b2]

aws_access_key_id = 003bc0ef44688310000000005

aws_secret_access_key = [applicationKey]

The applicationKey is the value that we saved previously when we created the key in the Backblaze console.
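The two file edits above can also be scripted, which helps when preparing several machines. The Python sketch below uses the standard library configparser to add (or overwrite) a "profile b2" section in config and a "b2" section in credentials, with region eu-central-003 and output json as above. The function name is hypothetical; note that configparser rewrites each file and drops any comments it contained, so back up existing files first.

```python
import configparser
from pathlib import Path


def write_b2_profile(aws_dir: Path, key_id: str, application_key: str,
                     region: str = "eu-central-003", profile: str = "b2") -> None:
    """Add or overwrite a B2 profile in <aws_dir>/config and <aws_dir>/credentials."""
    aws_dir.mkdir(parents=True, exist_ok=True)

    # ~/.aws/config uses "[profile <name>]" section headers.
    config_path = aws_dir / "config"
    config = configparser.ConfigParser(interpolation=None)
    config.read(config_path)  # keeps existing profiles; a missing file is ignored
    config[f"profile {profile}"] = {"region": region, "output": "json"}
    with config_path.open("w") as fh:
        config.write(fh)

    # ~/.aws/credentials uses plain "[<name>]" section headers.
    creds_path = aws_dir / "credentials"
    creds = configparser.ConfigParser(interpolation=None)
    creds.read(creds_path)
    creds[profile] = {"aws_access_key_id": key_id,        # the B2 keyID
                      "aws_secret_access_key": application_key}  # the B2 applicationKey
    with creds_path.open("w") as fh:
        creds.write(fh)
    creds_path.chmod(0o600)  # keep the secret readable only by the owner
```

Calling write_b2_profile(Path.home() / ".aws", "<keyID>", "<applicationKey>") produces the same two sections shown above.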

The configuration steps are complete, and we should now be able to use the AWS CLI to access our Backblaze B2 storage.

To use it, we need to specify the --endpoint-url option and the profile:

Example commands:


- List all buckets: aws s3 ls --endpoint-url https://s3.eu-central-003.backblazeb2.com --profile b2


- Copy a file: aws s3 cp file1 s3://backblaze-s3-api --endpoint-url https://s3.eu-central-003.backblazeb2.com --profile b2
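Since every command needs the same two options, a small wrapper avoids forgetting them. This Python sketch builds the AWS CLI argument list with --endpoint-url and --profile appended and can run it via subprocess. The endpoint default is the one used in the examples above; the function names are hypothetical, and running the commands requires the AWS CLI and the b2 profile configured as described.

```python
import shlex
import subprocess
from typing import List

B2_ENDPOINT = "https://s3.eu-central-003.backblazeb2.com"  # the bucket's Endpoint, with https://


def aws_b2(*args: str, endpoint: str = B2_ENDPOINT, profile: str = "b2") -> List[str]:
    """Build an AWS CLI argv that targets Backblaze B2 instead of AWS."""
    return ["aws", *args, "--endpoint-url", endpoint, "--profile", profile]


def run_aws_b2(*args: str) -> str:
    """Run the command (requires the AWS CLI and the b2 profile) and return stdout."""
    result = subprocess.run(aws_b2(*args), capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    # Print the shell-equivalent commands without running them.
    print(shlex.join(aws_b2("s3", "ls")))
    print(shlex.join(aws_b2("s3", "cp", "file1", "s3://backblaze-s3-api")))
```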

In the Backblaze console, we can navigate to “Browse Files”, select our bucket “backblaze-s3-api”, and see our newly uploaded file.

Backblaze B2 can be a good, cheaper alternative for cloud backups, and because most backup software already supports AWS S3 storage, migrating from AWS S3 to Backblaze B2 should be straightforward.

© Images from https://www.backblaze.com/

This article was written by Gonçalo Dias, Software Engineer at MD3.

Gonçalo has 7 years of experience in systems administration, with a special taste for Linux systems and high availability. He is MD3’s Head of IT and is responsible for managing the entire infrastructure of the Mediatree group and for the continuous development of all the solutions and technologies it uses.